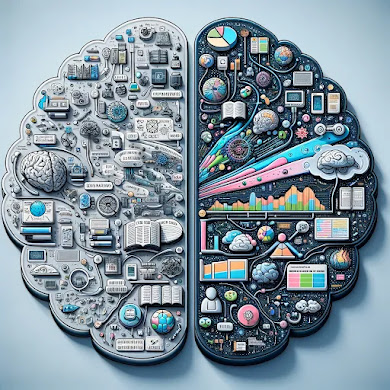

The Vanguard of Natural Language Processing

In the evolving landscape of natural language processing (NLP), two models stand at the forefront of innovation: GPT (Generative Pre-trained Transformer) and LAMDA (Language Model for Dialogue Applications). These models have revolutionized how we create text-based content, from weaving intricate short stories to crafting engaging articles and simulating realistic conversations. This exploration examines the distinct mechanisms and capabilities of GPT and LAMDA, illustrating their advantages and applications with detailed examples.

GPT: The Architect of Linguistic Mastery

GPT is a decoder-only transformer pre-trained on an extensive corpus of text to predict the next token in a sequence, and this vast pre-trained language model has redefined content generation. That training lets GPT closely mimic the style and substance of its training material, producing text that ranges from academic-sounding articles to creatively charged stories. The model's strength is generating grammatically polished, contextually relevant text across many genres, which makes it a versatile tool for numerous applications.
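To make the transformer part more concrete, here is a minimal sketch of masked (causal) scaled dot-product self-attention, the core operation inside each layer of a GPT-style decoder, written in plain Python. It rests on simplifying assumptions: a single attention head, no learned projection matrices, and made-up two-dimensional token vectors. Each position computes weights softmax(q·k / √d) over itself and the earlier positions only, then takes a weighted average of their value vectors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position i may only attend to positions 0..i, which is what lets a
    GPT-style decoder learn to predict the next token without seeing it.
    """
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scores are computed only for visible positions: the causal mask.
        scores = [
            sum(qj * kj for qj, kj in zip(q, keys[t])) / math.sqrt(d)
            for t in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out = [
            sum(w * values[t][j] for t, w in enumerate(weights))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Three toy 2-dimensional token vectors (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# For brevity, queries, keys and values are all x; a real model first
# multiplies x by separate learned projection matrices.
out = causal_self_attention(x, x, x)
print(out[0])  # the first token can only attend to itself: [1.0, 0.0]
```

A real GPT stacks many such layers, each with multiple heads, learned weights, and feed-forward sublayers; the masking logic, however, is the same idea shown here.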

Applications and Examples:

Story Generation: GPT can generate captivating short stories that read much like human writing. For instance, given a prompt about a lost civilization, GPT can spin a tale complete with character development, setting, and plot twists while maintaining a coherent narrative flow.

Automated Journalism: In digital journalism, GPT can draft articles on topics ranging from technology trends to global events, and it can condense source material supplied in the prompt into readable reports. This makes it a valuable asset for news agencies seeking to augment their content production, although its drafts still need editorial fact-checking, because the model can state incorrect information as fluently as correct information.

One of GPT's major advantages is that it generates text quickly enough for real-time applications such as chatbots, where rapid response times are crucial. Furthermore, because it adheres to grammatical norms and adapts to different writing styles, it can produce high-quality content tailored to specific needs.
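GPT generates text autoregressively: at each step the model assigns a score (logit) to every possible next token, one token is chosen, and that token is appended to the context for the following step. The same loop continues a story prompt and streams a chatbot reply token by token. The sketch below shows this loop with temperature sampling, a common decoding strategy; `TOY_LOGITS` is a hypothetical lookup table standing in for the trained network, and unlike a real GPT it looks only at the last token rather than the whole context.

```python
import math
import random

# Hypothetical stand-in for a trained model: maps the last token to
# unnormalized scores (logits) for the next token. A real GPT computes
# these from the entire preceding context with a transformer.
TOY_LOGITS = {
    "<start>": {"the": 2.0, "a": 1.0},
    "the": {"lost": 2.0, "city": 1.0},
    "a": {"lost": 1.0, "city": 2.0},
    "lost": {"city": 3.0},
    "city": {"<end>": 3.0},
}


def sample_next(logits, temperature, rng):
    """Sample one token from softmax(logits / temperature); temperature must be > 0."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # unnormalized probabilities
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(prompt_tokens, temperature=1.0, max_new_tokens=10, seed=0):
    """Yield new tokens one at a time, feeding each back in as context."""
    rng = random.Random(seed)
    context = list(prompt_tokens)
    for _ in range(max_new_tokens):
        token = sample_next(TOY_LOGITS[context[-1]], temperature, rng)
        if token == "<end>":
            break
        context.append(token)
        yield token  # a chatbot can display this before the reply is finished


for tok in generate(["<start>"], temperature=0.7):
    print(tok, end=" ")
print()
```

Lower temperatures sharpen the distribution toward the highest-scoring token, while higher ones make the output more varied. Yielding each token as soon as it is chosen is what lets an interface show a reply while it is still being generated.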

LAMDA: The Connoisseur of Custom Content

LAMDA takes a different emphasis. Like GPT, it is built on a pre-trained transformer language model, but Google developed it specifically for open-ended dialogue, and it shapes its output using discrete attributes such as genre, topic, and sentiment. This enables LAMDA to produce content that aligns with the user's preferences while retaining the richness and diversity of human-written text.
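LAMDA's exact conditioning mechanism is not described here, so the following is only a hedged sketch of one well-known technique for attribute-controlled generation: control codes, as used by Salesforce's CTRL model, where discrete attribute tags are prepended to the model input. The attribute names, allowed values, tag syntax, and the `build_conditioned_input` helper are all hypothetical.

```python
# Hypothetical attribute vocabulary; names and values are illustrative only.
ALLOWED_ATTRIBUTES = {
    "genre": {"news", "story", "ad"},
    "topic": {"tech", "travel", "food"},
    "sentiment": {"positive", "neutral", "negative"},
}


def build_conditioned_input(attributes, text):
    """Prefix `text` with one control tag per requested discrete attribute.

    A model trained on examples that begin with such tags learns to link
    each tag to the style and content that follow it, so changing a tag
    at generation time steers the output.
    """
    unknown = set(attributes) - set(ALLOWED_ATTRIBUTES)
    if unknown:
        raise ValueError(f"unknown attributes: {sorted(unknown)}")
    tags = []
    for name in sorted(attributes):  # fixed order keeps inputs consistent
        value = attributes[name]
        if value not in ALLOWED_ATTRIBUTES[name]:
            raise ValueError(f"unsupported {name}: {value!r}")
        tags.append(f"<{name}={value}>")
    return " ".join(tags + [text])


prompt = build_conditioned_input(
    {"topic": "travel", "sentiment": "positive"},
    "Describe our new hiking boots.",
)
print(prompt)
# -> <sentiment=positive> <topic=travel> Describe our new hiking boots.
```

This covers only the input side: the tags steer generation only if the model was trained on text prefixed with the same tags.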

Applications and Examples:

Personalized Marketing: LAMDA shines in creating targeted marketing content that resonates with different audience segments. By adjusting its sentiment and topic attributes, it can generate compelling product descriptions and advertisements that appeal to consumers' emotions and interests.

Interactive Conversations: LAMDA's ability to tailor conversations according to discrete attributes makes it exceptionally suitable for customer support chatbots. It can vary its responses based on the customer's mood or the conversation's subject, providing a personalized experience that enhances customer satisfaction.
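One plausible way to vary responses with the customer's mood is generate-then-rerank: sample several candidate replies, score each with an attribute classifier, and return the one closest to a target value for the detected mood. This is a generic technique, not a documented description of LAMDA's internals. In the sketch, the word lists, the `TARGET_WARMTH` mapping, and the helper names are hypothetical, and a simple word count stands in for a trained tone classifier.

```python
import string

# Hypothetical word lists standing in for a trained tone classifier.
WARM_WORDS = {"happy", "glad", "thanks", "welcome", "sorry"}
COLD_WORDS = {"unfortunately", "cannot", "denied", "policy"}

# Hypothetical mapping from detected customer mood to target warmth in [-1, 1].
TARGET_WARMTH = {"frustrated": 1.0, "calm": 0.0}


def tone_score(text):
    """Toy warmth score in [-1, 1]: (warm - cold) / matched words, 0.0 if none."""
    words = [w.strip(string.punctuation).lower() for w in text.split()]
    warm = sum(w in WARM_WORDS for w in words)
    cold = sum(w in COLD_WORDS for w in words)
    if warm + cold == 0:
        return 0.0
    return (warm - cold) / (warm + cold)


def pick_response(candidates, customer_mood):
    """Return the candidate whose tone score is closest to the mood's target."""
    target = TARGET_WARMTH[customer_mood]
    return min(candidates, key=lambda c: abs(tone_score(c) - target))


# Candidate replies a model might have sampled for the same request.
candidates = [
    "Unfortunately, we cannot change that order.",
    "Your order ships on Monday.",
    "Happy to help! Thanks for your patience, and sorry for the trouble.",
]
print(pick_response(candidates, "frustrated"))
print(pick_response(candidates, "calm"))
```

Keeping the scorer separate from the generator means the same candidates can be reranked for different moods, or for other attributes such as topic, without retraining the language model.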

LAMDA's main advantage lies in its capability to generate customized content catering to individual preferences and requirements. This makes it particularly useful for applications demanding a high degree of personalization, such as customer service and targeted content creation. Additionally, like GPT, LAMDA offers the benefit of rapid content generation, meeting the needs of time-sensitive applications.

Conclusion: Charting the Future of Text Generation

GPT and LAMDA represent significant milestones in natural language processing, each bringing unique strengths to the table. With its broad applicability and proficiency in generating coherent and diverse text, GPT is well-suited for tasks requiring speed and versatility. LAMDA, meanwhile, offers extensive customization through its attribute-driven approach, opening new possibilities for personalized content creation. As we continue to explore and refine these models, the future of text generation looks promising, with ever more innovative applications and deeper interactions between humans and machines.
